Digitized and Automated Infrastructure is all we need!

Before we begin learning and coding our infrastructure, let us understand why we need it.

Imagine you have an infrastructure in AWS with multiple S3 buckets, instances, ELBs, ALBs, security groups, VPCs, gateways, and NATs attached to one another, and, most importantly, everything was brought up manually.

What happens when some resource goes down (😨 😨) and most of the others depend on it? You break your head over the mundane job of finding and fixing your resources one at a time and testing everything.

What if we instead write the infrastructure as code, so that debugging and fixing happen in one place and every resource can be brought back up and running? It is all about spinning up and tearing down your infrastructure in little or no time. This is what Terraform provides.

Terraform enables you to safely and predictably create, change, and improve infrastructure.

It is an open source tool that codifies APIs into declarative configuration files that can be shared amongst team members, treated as code, edited, reviewed, and versioned.

Terraform Features:

- Infrastructure as Code

- Execution Plans

- Resource Graph

- Change Automation

Running a marathon using Terraform ...

Let's not waste time: we will start by creating an S3 bucket in AWS using Terraform.

Terraform supports most major providers. I chose AWS to create my infra in this blog; you can use your own.

Before proceeding, make sure you have an AWS account in which to create your infrastructure, and install Terraform by following the Terraform Installation Guide. Then follow these steps to create the S3 infra:

mkdir ~/terraform_infra && cd ~/terraform_infra && touch s3.tf

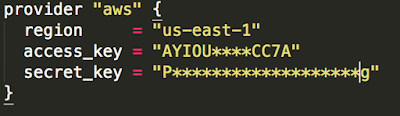

Provider.tf

The provider block says we are using AWS as our provider, where:

- region: the region where our resources will be created

- Key/Secret: the access key and secret key provided by AWS
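As a rough text version of the provider block described above, the following is a minimal sketch. The region value is taken from the plan output later in this post; the key values are placeholders and must not be committed to version control.

```hcl
# Minimal sketch of the provider block (placeholder credentials, assumed region).
provider "aws" {
  region     = "us-east-1"            # region where resources will be created
  access_key = "YOUR_AWS_ACCESS_KEY"  # placeholder, issued by AWS
  secret_key = "YOUR_AWS_SECRET_KEY"  # placeholder, issued by AWS
}
```

If you would rather not write keys into the file, the AWS provider can also pick up credentials from the environment or from a named profile.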

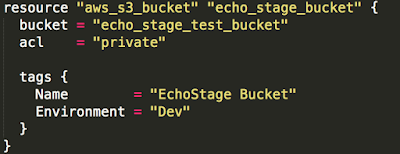

resource.tf

The resource block says which resource we are creating in our infra. Here aws_s3_bucket is the resource type that Terraform understands as an S3 bucket, and echo_stage_bucket is our own reference name for that bucket.

- bucket: the name of the bucket that will be created in AWS

- acl: the bucket's access control (for example public or private)

- tags: tags describing the bucket, such as its environment
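The resource block described above might look roughly like the sketch below. The values are reconstructed from the plan output shown later in this post, and the syntax follows the Terraform and AWS provider versions in use when this was written; newer AWS provider releases manage the ACL through a separate resource, so treat this as illustrative.

```hcl
# Sketch of the S3 bucket resource, reconstructed from the plan output.
resource "aws_s3_bucket" "echo_stage_bucket" {
  # Name as used in this post. Current S3 naming rules do not accept
  # underscores, so a new bucket would likely need hyphens instead.
  bucket = "echo_stage_test_bucket"
  acl    = "private"

  tags = {
    Name        = "EchoStage Bucket"
    Environment = "Dev"
  }
}
```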

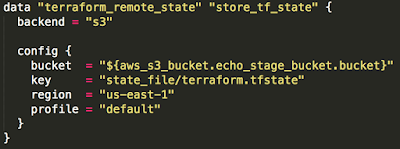

storage.tf

The data block (terraform_remote_state) tells Terraform where our remote state file lives. This is needed because once we create the infrastructure we have to version and maintain its state; if we lose the state file, we lose track of our infrastructure. Here I am using S3 to store my Terraform state.

- backend: where the state file is stored

- bucket: the bucket name, referencing the bucket that we have created

- key: the file name (path) under which the state will be saved
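A sketch of the data block, with values taken from the plan output shown below (four config entries: bucket, key, profile, and region). Older Terraform releases commonly wrote the settings as a `config { ... }` block rather than the `config = { ... }` assignment used here.

```hcl
# Sketch of the remote state data source, reconstructed from the plan output.
data "terraform_remote_state" "store_tf_state" {
  backend = "s3"

  config = {
    bucket  = "echo_stage_test_bucket"        # bucket created by the resource block
    key     = "state_file/terraform.tfstate"  # path of the state file in the bucket
    profile = "default"                       # AWS credentials profile
    region  = "us-east-1"
  }
}
```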

➜ terraform plan

+ aws_s3_bucket.echo_stage_bucket

acceleration_status: ""

acl: "private"

arn: ""

bucket: "echo_stage_test_bucket"

bucket_domain_name: ""

force_destroy: "false"

hosted_zone_id: ""

region: ""

request_payer: ""

tags.%: "2"

tags.Environment: "Dev"

tags.Name: "EchoStage Bucket"

versioning.#: ""

website_domain: ""

website_endpoint: ""

<= data.terraform_remote_state.store_tf_state

backend: "s3"

config.%: "4"

config.bucket: "echo_stage_test_bucket"

config.key: "state_file/terraform.tfstate"

config.profile: "default"

config.region: "us-east-1"

Plan: 1 to add, 0 to change, 0 to destroy.

Let's create the infra:

➜ terraform apply

aws_s3_bucket.echo_stage_bucket: Creating...

acceleration_status: "" => ""

acl: "" => "private"

arn: "" => ""

bucket: "" => "echo_stage_test_bucket"

bucket_domain_name: "" => ""

force_destroy: "" => "false"

hosted_zone_id: "" => ""

region: "" => ""

request_payer: "" => ""

tags.%: "" => "2"

tags.Environment: "" => "Dev"

tags.Name: "" => "EchoStage Bucket"

versioning.#: "" => ""

website_domain: "" => ""

website_endpoint: "" => ""

aws_s3_bucket.echo_stage_bucket: Still creating... (10s elapsed)

aws_s3_bucket.echo_stage_bucket: Creation complete

data.terraform_remote_state.store_tf_state: Refreshing state...

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

The state of your infrastructure has been saved to the path

below. This state is required to modify and destroy your

infrastructure, so keep it safe. To inspect the complete state

use the `terraform show` command.

State path: terraform.tfstate

You should now have the terraform.tfstate state file on your local machine. Let's push it to the S3 bucket we created. Execute:

➜ terraform remote config -backend=s3 -backend-config="bucket=echo_stage_test_bucket" -backend-config="key=state_file/terraform.tfstate" -backend-config="region=us-east-1"

Remote state management enabled

Remote state configured and pulled.
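Note that the `terraform remote config` command belongs to older Terraform releases; from Terraform 0.9 onward, remote state is declared in a backend block and activated with `terraform init`. A minimal sketch of the equivalent configuration, reusing the bucket, key, and region from the command above:

```hcl
# Sketch of the S3 backend for Terraform 0.9 and later.
# Values mirror the -backend-config flags used in this post.
terraform {
  backend "s3" {
    bucket = "echo_stage_test_bucket"
    key    = "state_file/terraform.tfstate"
    region = "us-east-1"
  }
}
```

After adding a block like this, running `terraform init` should detect the new backend and offer to copy the existing local state into it.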
